In the world of audio production, one will often encounter the term “mastering.” The term originated in the era of vinyl record pressing, when a specialist would take the final master tape from the mix engineer through a series of processes to “cut” a master disc on a lathe, from which the album was pressed. The engineer responsible for this process ensured that the frequencies and overall levels of the recording were within specifications that would make the record playable and reasonably consistent with other records on consumer playback equipment. Too much signal and the grooves would be too wide or cause distortion. Too much energy in the wrong frequency range could cause tracking errors, or cause the stylus to “jump” out of the groove. Mastering was a complex game of working around the significant limitations of the average consumer record player. As a result, when an engineer played it too safe and applied steep EQ cuts, the vinyl pressing was often a pale reflection of the original mix.

After the advent of magnetic tape, the mastering process expanded into multiple steps where the stereo “master tape” from the balance/mix engineer would be approved by the artist/producer and a second engineer would collaborate with Artist & Repertoire management and the producers to make further adjustments to the general equalization, loudness via dynamic compression and limiting, “sweetening” (in the form of added harmonic distortion or even reverb) to meet their preferred criteria of a quality product. This often required one step of generation loss from the analog tape of the mix, through mastering processors into a second analog tape recorder.

When digital machines entered the market in the late 70s and early 80s, their earliest applications were in mastering, where their “lossless” capture potential eliminated additional analog generation loss during this step. Those of us who lived through the 80s and 90s recall the vast wave of analog recordings reissued as “digital remasters” on CD. These reissues allowed the “mastering” step to be revisited to make the most of the excellent fidelity of the new 16-bit, 44.1 kHz digital format and, in many cases, to take advantage of improved post-production processes and technologies. It was not just a marketing gimmick: there were significant compromises in the record pressing processes of the 1950s, 60s & 70s. Stories abound of vinyl masters from the 60s and 70s that were cut by salaried label staff with zero input or oversight from the producers or artists, and that drastically altered the original mixes for the worse. One can imagine some schlub in a polyester shirt with a cigarette dangling from his mouth, cranking through a stack of masters every day as if pushing paper for the IRS.

By the 1970s and especially in the 1980s, mastering engineers like Bob Katz & Bob Ludwig became highly sought-after specialists who could take a recording to “a new level” of quality using state-of-the-art post-processors, or potentially salvage a mix that the record label felt was unsatisfactory. While there’s no shortage of analog and vinyl purists today, it’s easy to forget that the dawn of the CD era was a renaissance for the average audiophile. The compact disc (or lossless 16/44.1 FLAC) remains my format of choice for quality playback.

Mastering Is Overloaded

Pun intended. Mastering has evolved to the point in modern workflows that the term “mastering” is overloaded. It may mean different things today, depending on the use case. Because it’s ill-defined, and because there are ongoing breakthroughs in AI-assisted and algorithmic software tools, perhaps it’s more important than ever to stop arguing about what it is or isn’t and cover the spectrum of how it’s conditionally applied in engineering workflows and the situations where the separation of concerns is practical or collaborative.

By the 90s, what originated as a highly specialized technical step requiring expensive precision equipment to transfer a tape to a record lathe was no longer necessary. The specifications of the digital consumer formats, from the Compact Disc onward to MP3, MP4, ALAC & FLAC, were simple. Any engineer could print a digital master for market. They simply had to ensure that it didn’t clip (exceed the mathematical limits of the format), using a safety limiter, and that it was comparably loud to other compact disc recordings on the market, using compression or limiting. Nonetheless, “mastering” had by then become a buzzword in the record-production marketplace and was cemented into the traditional process, incentivized by A&R management as an added layer of both quality control and artistic intervention. This was complicated by the traditional methodology taught in audio engineering courses: track and mix conservatively, balancing maximum headroom against the noise floor. That is never a bad approach to achieving great fidelity, but it is widely dismissed by professionals in the real world who are after a specific sound.
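To make “exceeding the mathematical limits of the format” concrete, here is a minimal sketch of a sample-peak check. It assumes floating-point samples where full scale is ±1.0 (0 dBFS); the function names and the -1.0 dBFS safety ceiling are illustrative assumptions, not a standard, and a real safety limiter does far more than measure.

```python
import math


def peak_dbfs(samples):
    """Return the sample peak in dBFS, assuming full scale is +/-1.0."""
    peak = max(abs(s) for s in samples)
    if peak == 0:
        return float("-inf")  # digital silence has no finite dB value
    return 20 * math.log10(peak)


def exceeds_ceiling(samples, ceiling_dbfs=-1.0):
    """True if the peak is above the chosen ceiling (hypothetical default)."""
    return peak_dbfs(samples) > ceiling_dbfs


if __name__ == "__main__":
    quiet_mix = [0.5, -0.5, 0.25]
    hot_mix = [0.95, -0.99, 0.7]
    print(round(peak_dbfs(quiet_mix), 2), exceeds_ceiling(quiet_mix))
    print(round(peak_dbfs(hot_mix), 2), exceeds_ceiling(hot_mix))
```

This only looks at individual sample values. It says nothing about how loud the program sounds, which is a separate measurement.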

Mastering As Collaborative Mixing

Simultaneously, as the barriers to entry to production all but collapsed thanks to the DAW and a relentlessly competitive race to maximize value for the vast prosumer market, a huge population of independent artist/producers emerged with variable experience and quality of equipment. Great ideas and excellent independent works emerged from enthusiasts with a few mics, a converter and a laptop. Since the attributes of “fidelity” are largely subjective and genre-dependent (lo-fi is an intentional artistic choice), the arguments about objective quality and fidelity frankly have a ring of insecurity and desperation. They either apply subjective criteria or amount to attempts at occupational justification.

It boils down to two simple criteria.

- A. Is the producer (artist, label, et al) able to achieve their desired results independently without help?

- B. Does the program translate satisfactorily across the target delivery systems?

Are you achieving the results you want?

If the answer is yes, great, move on. If you have doubts (keeping in mind the entire pro audio industry marketing apparatus depends on exploiting your doubts and insecurities) or know that your product does not achieve the sonic goals you’re trying to achieve when you compare it against your chosen benchmark of quality, then you have a problem that needs to be solved.

You’re the artist. You have a mix you like. The broad strokes are how you want them, but it’s not quite there. This is well known as the 80/20 problem. This is where our overloaded term “mastering” typically enters the production discussion. At this point you have options, depending on your budget. I’ve tried all of them, or worked on projects that tried them, at various points in my journey.

- Use AI mastering. Services like LANDR are good and getting better every year. If nothing else, the AI algo will ensure that a decent indie mix translates reasonably well across most devices — that the broad equalization isn’t too shrill or boomy. If this is an indie release through Spotify that you’re promoting to your 100 followers on (insert social media platform here), you’re effectively peeing in a digital ocean anyway. It doesn’t matter as much as the overall quality of the performance and song.

- DIY – Invest more time and money into better monitoring, acoustic treatment, high-end mixing headphones and an instructive mastering AI tool to help you understand how you can improve your mix balance.

- Stem Mastering – employ a professional collaborator to polish the broad strokes with a reduced choice set by providing a set of stems of the main groups. This is a value-optimized choice where a professional can both efficiently polish the rough edges and provide a great final product in one step without deviating from the artistic choices in your mix. Having the components, usually grouped like bass, drums, vocals, and instruments allows the mastering engineer far more control over problems with a few relatively efficient steps and fewer iterations when there’s an issue with your mix.

- Traditional Mastering – This is where things can get a bit tricky. A reputable traditional mastering engineer is accustomed to receiving quality work from professional mix engineers, and will usually assume that the decisions in the mix, however sonically egregious or unusual, were approved by the artist and/or producer. The mastering engineer is ultimately responsible for quality control, polish and pushing the quality forward by 5-10%, especially if they are offering affordable services. Attempting to compensate for major mix issues requires significant compromises on their part. Such issues are better addressed through mix revisions, which means delays and potentially insulting the client by asking tough questions.

- Hire a mix engineer – it’s SOP (Standard Operating Procedure) in the modern professional workflow for the producer (tracking engineer) to send their entire project or exports of every track with a reference mix for a professional to mix. There are excellent reasons for doing this, but because it’s inherently labor intensive, it usually isn’t cheap.

The New Role of AI In Audio Engineering

Artificial intelligence (or, more precisely, trained machine learning algorithms) is rapidly changing the landscape of audio production. Its practical application is best classified as a “tool” with rapidly expanding potential as an intelligent reference and instructor. Reference is mission critical in audio and has always been a challenge, even for the most seasoned veterans. Human hearing and psychoacoustics are complicated by many factors including frequency-specific hearing loss, general health, blood pressure, earwax, time-based fatigue, selective attention, deceptive comparison, and the list goes on. The quality of what a mix engineer is listening through is just as important as their experience and familiarity with reference material on that specific kit. Critical listening skills take years of practice, and AI-assisted tools provide both a way to objectively analyze a given program against a baseline and quantitative feedback on specifics that may represent potential problems to improve upon. If a mastering analysis tool is applying more than 2 dB of correction to any part of the frequency spectrum, that’s highly instructive, BUT it only makes sense in the context of the artistic work, which the AI does not comprehend. If you’re consistently getting feedback from your AI on your mixes in the same frequency ranges, then you probably have fundamental problems in your mixes or monitoring that you should address. AI does many things well, but the current generation is still operating on data sets that represent averages and guessing based on genre-level curve analysis. Unlike an experienced mastering engineer, an AI is not going to provide valuable suggestions when a specific element in your mix is too loud, too quiet, is masked by another element, needs more transient attack, or has sibilants that will sound like crap on 15% of consumer equipment.

What’s more, these mastering reference tools expand the potential for great mixing on headphones, something that is highly desirable for the modern producer working on the go. Most mix engineers agree that headphones are invaluable for spot checking, quick fixes and detail work, but that balancing a mix on them is challenging or impossible, because what sounds great on headphones often fails to translate to speakers in an acoustic space due to the characteristics of ambient crossfeed and center-field perception. Once a mix engineer gains intimate familiarity with the sound of a headset through hours of reference listening, tools like iZotope’s Ozone or Logic’s Mastering Assistant, combined with a bit of crossfeed or tools like Sonarworks SoundID or Sienna (available in a free edition), can help tackle the tricky decisions related to low end and center-of-field balance without the need for expensive monitors and acoustically treated and tuned listening spaces. Professionals today are relying more on these tools so they can meet project deadlines anywhere, whether at home or in hotel rooms while travelling.

Mastering As QA and Release Preparation

The world’s highest paid mastering engineers often pull up a song from the mix engineer and apply this incredibly challenging process to prepare it for release:

Nothing. Nada. Zilch.

That’s right. Sometimes a track is perfect and needs nothing. These engineers are so good they resist the urge to put their fingerprints on it. They have years of critical listening in the best listening spaces under their belts and with zen master like grace, they do nothing and get paid for it. Given these mastering engineers are working with the best mix engineers, it’s probably more common than we think.

At minimum, the role of mastering has always been the final step of quality control. Mix engineers are human and make mistakes. A mastering engineer is an experienced critical listener. Every professional manufacturing process should employ a step of quality assurance, music production for distribution, physical or digital included.

Otherwise, a great mastering engineer is responsible for carefully listening to the final product to check for issues and ensure the entire collection hits the client’s requested LUFS target as transparently as possible. In the cases where they are working with top pro mixers, they are often making broad 0.5 dB EQ moves for continuity. In extreme cases, mastering engineers are dealing with bad mixes, or with mixes from many different sessions and mixers of varying quality, which requires more care to define a continuous signature using EQ, limiting and compression. Many top pop mix engineers are sending mixes that are already loudness maximized to taste with a bit of reserve headroom for alteration, because the best mix engineers understand that the most accurate control of density comes during the mix step, not the master. This is increasingly known as the “top down” method.

If a mastering engineer is hard limiting a mixer’s work by 3-5 dB to achieve targets, it usually means one of the following:

- The mix engineer granted the mastering engineer a tremendous amount of creative control (not a bad thing). This is not uncommon when the mix engineer mixed into, or regularly referenced, a target limiting/compression stack, then removed it as a professional collaborative courtesy and sent their original version as a reference.

- The mix engineer intended the recording to have a lot of dynamic range and there’s a miscommunication somewhere.

- It’s an old recording from a time when dynamic range was (gasp) desirable (and it was).

- The mix engineer is a noob and didn’t know what a master buss was.

Loudness is Taste

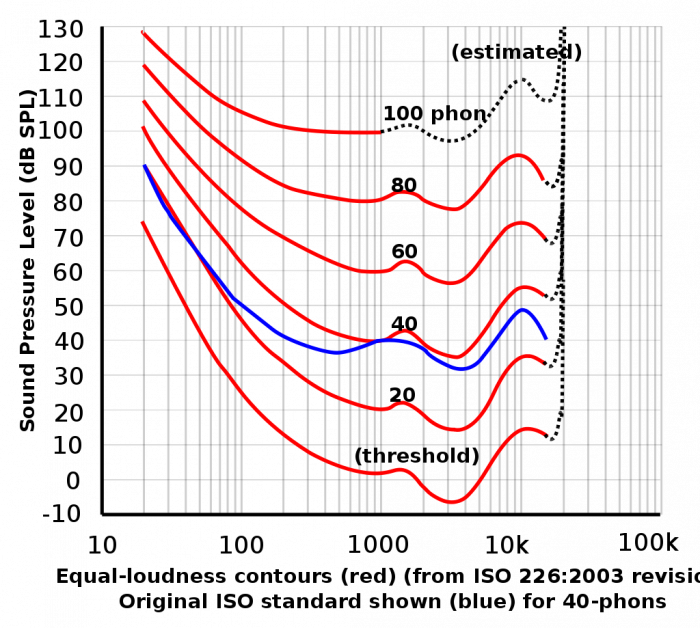

In the modern digital streaming era, platforms solve the age-old problem of normalizing relative loudness across different program material with an algorithm, typically measuring in LUFS, which stands for Loudness Units relative to Full Scale. It’s a standardized measurement of audio loudness that factors psychoacoustics and electrical signal intensity together to measure perceived loudness. This is needed because musical program can be highly dynamic and perceived loudness varies by frequency, so simple measures like average or peak decibel levels are insufficient.

This normalization is good for the consumer experience but has caused quite a bit of confusion. LUFS measures something that seems psychoacoustically esoteric and difficult to understand, but isn’t: how loud something sounds compared with something else. Much hand-wringing has flooded the Internet about whether or not a recording should target a specific LUFS recommendation, which often misses the point. The fear seems to be that targeting a ridiculously low level of -14 LUFS is necessary so that your song won’t be perceptibly quieter than other songs. NO. WRONG ANSWER. Normalization normalizes by definition. The typical processes that increase or maximize loudness also alter the character of the program material and have other highly practical attributes with funny names like “glue,” “gel,” “sizzle” or “density.” This is purely a matter of taste. It’s also a matter of fact that, within reason, loudness maximization through compression, limiting and clipping translates better in pop music genres across a wider range of consumer playback equipment and listening scenarios (like a noisy environment), even when the platform algorithm compensates for it by reducing the gain/volume.
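The arithmetic behind “normalization normalizes by definition” can be sketched in a few lines. This is a simplified model that assumes the platform applies one static gain per track, equal to the difference between its target and the track’s measured integrated loudness. The -14 LUFS target, the track names and the loudness values are illustrative assumptions, and real platforms differ in details such as whether and how far they turn quiet tracks up.

```python
def normalization_gain_db(measured_lufs, target_lufs=-14.0):
    """Static gain in dB that moves a track from its measured loudness to the target."""
    return target_lufs - measured_lufs


def db_to_linear(gain_db):
    """Convert a gain in dB to a linear amplitude multiplier."""
    return 10 ** (gain_db / 20)


if __name__ == "__main__":
    # Hypothetical integrated loudness readings for two masters.
    tracks = {"dense pop master": -8.0, "dynamic jazz master": -16.0}
    for name, lufs in tracks.items():
        gain = normalization_gain_db(lufs)
        print(f"{name}: {lufs} LUFS, gain {gain:+.1f} dB, plays back at {lufs + gain} LUFS")
```

Under this model both masters play back at the same loudness. The pop master is simply turned down by 6 dB, keeping whatever density its limiting gave it, which is why the choice of how hard to push a master is about character rather than about being heard.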

The wonderful benefit of loudness normalization is that it finally ended the loudness wars, and now you can enjoy your highly dynamic jazz, classical and chamber music on the same playlist as your pop music without adjusting the volume. It’s a tremendous opportunity for artists to take advantage of dynamic range again, because dynamics are musical!